Apple has taken a bold step into the future of coding with a fascinating experiment that could change how developers build user interfaces. In a newly published research paper, the company revealed how it trained an AI model to, in effect, teach itself to write working SwiftUI code, even though its original training data contained almost no SwiftUI examples.

The project, called UICoder, was built on top of StarChat-Beta, an open-source coding model. Apple’s researchers identified a key problem: while large language models are getting better at writing code, they often fail to generate clean, functional user interface code. This is largely because high-quality UI code is rare in training datasets, sometimes making up less than one percent of the total examples.

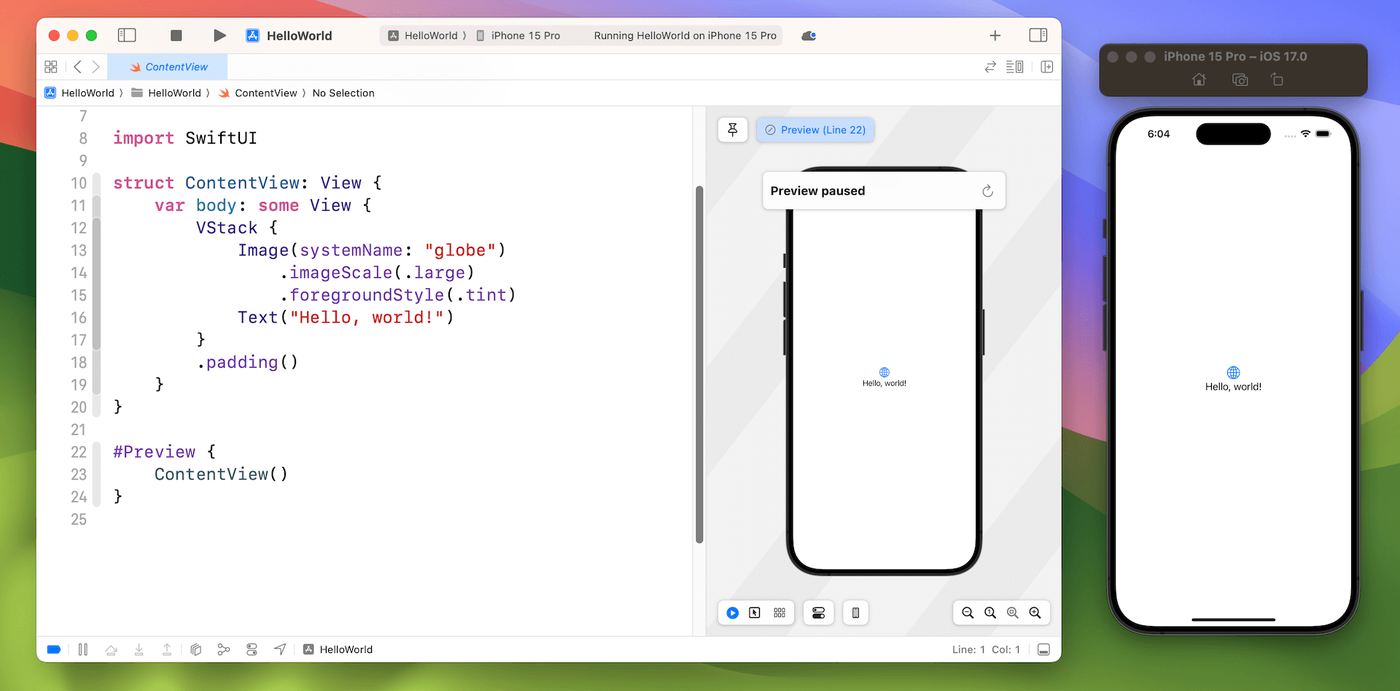

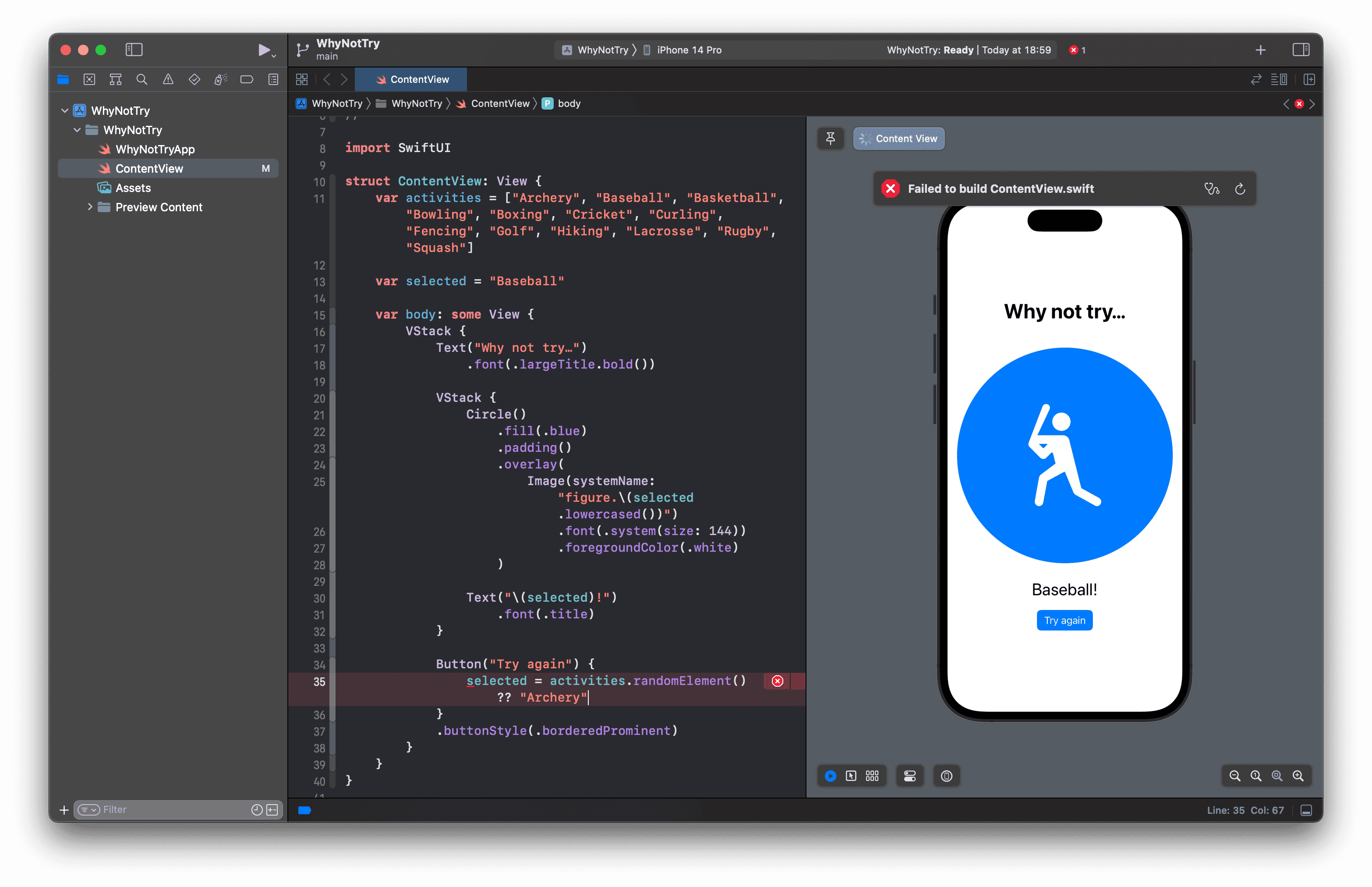

To overcome this, Apple designed a clever process. First, the model was fed natural language descriptions of user interfaces and asked to generate SwiftUI programs. Every output was then compiled with Apple’s Swift compiler to check that it would actually build. Programs that compiled were reviewed by GPT-4V, a vision-language model that compared the rendered interface with the original description. Outputs that failed to compile, looked irrelevant to their description, or duplicated earlier attempts were discarded, and only the best results were added to a new training set.
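The filtering stage can be pictured as a small function that takes a batch of generated programs and keeps only the survivors. The Python below is a minimal sketch under stated assumptions, not Apple’s implementation: `compiles` and `relevance` are hypothetical callables standing in for a Swift compiler invocation and a vision-language judge such as GPT-4V, the numeric `threshold` and the whitespace-based duplicate check are simplifications chosen for illustration, and the names `Candidate` and `filter_candidates` are made up.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Candidate:
    description: str  # natural-language UI description given as the prompt
    code: str         # SwiftUI source text generated by the model


def normalize(code: str) -> str:
    # Collapse all whitespace so copies that differ only in formatting match.
    return " ".join(code.split())


def filter_candidates(
    candidates: Iterable[Candidate],
    compiles: Callable[[str], bool],          # hypothetical wrapper around a Swift compiler
    relevance: Callable[[Candidate], float],  # hypothetical vision-language judge score
    threshold: float,                         # assumed cutoff for "good enough"
) -> list[Candidate]:
    kept: list[Candidate] = []
    seen: set[str] = set()
    for cand in candidates:
        key = normalize(cand.code)
        if key in seen:
            continue  # duplicate of an earlier attempt
        seen.add(key)
        if not compiles(cand.code):
            continue  # discard programs that fail to compile
        if relevance(cand) < threshold:
            continue  # discard UIs that do not match their description
        kept.append(cand)
    return kept


if __name__ == "__main__":
    # Toy stand-ins only: balanced parentheses act as a fake "compiler",
    # and a keyword check acts as a fake relevance judge.
    def toy_compiles(code: str) -> bool:
        return code.count("(") == code.count(")")

    def toy_relevance(cand: Candidate) -> float:
        return 1.0 if "Button" in cand.code else 0.0

    batch = [
        Candidate("a login button", 'Button("Log in") {}'),
        Candidate("a login button", 'Button("Log in")   {}'),  # duplicate
        Candidate("a title", 'Text("Hi"'),                      # does not "compile"
        Candidate("a list of names", 'Text("unrelated")'),      # irrelevant
    ]
    for cand in filter_candidates(batch, toy_compiles, toy_relevance, 0.5):
        print(cand.description, "->", cand.code)
```

Running the demo prints a single line, `a login button -> Button("Log in") {}`. Checking for duplicates before compiling is a deliberate choice in this sketch: a repeated program never costs a second compiler run.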

This cycle was repeated several times. Each new version of the model produced better code than before, which in turn created a cleaner dataset for the next round of training. After five iterations, Apple had built a dataset of nearly one million SwiftUI programs and a model that consistently produced high-quality, functional interfaces.
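The outer loop, in which each round’s model generates the data for the next round, can be sketched as follows. This is an illustrative Python sketch, not Apple’s training code: `generate`, `keep`, and `finetune` are hypothetical callables for sampling programs, applying the compile-and-judge filter, and fine-tuning. Whether each round trains on all data kept so far or only on the newest batch is an assumption here; this version accumulates.

```python
from typing import Callable, List, Set, Tuple, TypeVar

M = TypeVar("M")          # whatever object represents the current model
Pair = Tuple[str, str]    # (description, program)


def self_improve(
    model: M,
    descriptions: List[str],
    generate: Callable[[M, str], List[str]],  # hypothetical: sample programs for a description
    keep: Callable[[str, str], bool],         # hypothetical: compile + judge filter
    finetune: Callable[[M, List[Pair]], M],   # hypothetical: train on the kept pairs
    rounds: int = 5,                          # the article reports five iterations
) -> Tuple[M, List[Pair]]:
    dataset: List[Pair] = []
    seen: Set[str] = set()  # programs already accepted in any round
    for _ in range(rounds):
        for desc in descriptions:
            for code in generate(model, desc):
                if code in seen or not keep(desc, code):
                    continue  # skip repeats and rejected outputs
                seen.add(code)
                dataset.append((desc, code))
        # Assumption: each round fine-tunes on everything kept so far.
        model = finetune(model, dataset)
    return model, dataset


if __name__ == "__main__":
    # Toy stand-ins: the "model" is an integer skill level, it emits one more
    # numbered program each round, and "fine-tuning" just increments it.
    def toy_generate(level: int, desc: str) -> List[str]:
        return [f"{desc}#{i}" for i in range(level + 1)]

    def toy_finetune(level: int, data: List[Pair]) -> int:
        return level + 1

    final, data = self_improve(
        0, ["login form"], toy_generate, lambda d, c: True, toy_finetune, rounds=3
    )
    print(final, len(data))  # 3 3
```

In the toy run, each round re-emits the programs it produced before plus one new one, and the `seen` set ensures only the new program is added, so after three rounds the dataset holds three unique pairs.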

The results were striking. UICoder not only outperformed its base model but also came close to matching GPT-4 in overall quality. In fact, it surpassed GPT-4 in one key area: compilation success rate. That means its code was more likely to build without errors, a crucial factor for real-world development.
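Compilation success rate is simply the share of generated programs that compile. A minimal Python sketch, using a hypothetical `compile_rate` helper and made-up outcomes rather than figures from Apple’s paper:

```python
from __future__ import annotations


def compile_rate(results: list[bool]) -> float:
    """Share of generated programs that compiled; True means the build succeeded."""
    if not results:
        raise ValueError("need at least one result")
    return sum(results) / len(results)


if __name__ == "__main__":
    # Illustrative outcomes only, not results from the paper.
    model_a = [True, True, False, True]
    model_b = [True, False, False, True]
    print(compile_rate(model_a), compile_rate(model_b))  # 0.75 0.5
```

Note that this metric says nothing about whether the compiled interface matches its description, which is why it is reported alongside an overall quality comparison.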

Perhaps the most surprising detail is that the base StarChat-Beta model had little to no SwiftUI training data. Swift repositories were accidentally excluded from the original dataset, and among thousands of instruction examples, only one contained Swift code. This means that UICoder’s ability to generate SwiftUI was not inherited but learned almost entirely from the synthetic data it created for itself.

Apple’s researchers believe this method could go far beyond SwiftUI. The same self-improving feedback loop could be applied to other programming languages and UI frameworks, potentially creating models that can master coding domains where training data is scarce.

For developers, this research is a glimpse into a future where AI could become a reliable partner in building applications, handling the repetitive or tricky parts of coding while leaving the creative vision to humans. It also underscores Apple’s deep investment in blending artificial intelligence with practical tools for its ecosystem.

As AI continues to reshape the landscape of programming, UICoder is a reminder that the next big breakthroughs might not come from feeding models more data but from teaching them how to create and refine their own.

For the latest updates on AI research, coding breakthroughs, and all things tech, follow Tech Moves on Instagram and Facebook.